Background: The Importance of Context in AI Programming

If the “intelligence” of large models such as Claude 3.5 Sonnet is the key factor behind the step change in AI programming capabilities, the other critical factor is context length.

Claude 3.5 Sonnet currently offers a maximum context length of 200k tokens. That is more than enough for conversational use, where it can handle a 50,000- or even 100,000-word book with ease, but it falls far short for programming projects involving tens or hundreds of code files, each spanning hundreds or thousands of lines. Furthermore, because large models charge by the number of input and output tokens, the marginal cost of reading extra code is not negligible.
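To make the scale concrete, here is a back-of-the-envelope sketch of how quickly a codebase outgrows a 200k-token window. The figure of 10 tokens per line is an assumed average chosen purely for illustration (real code varies widely), and the function names are hypothetical.

```python
# Rough estimate of whether a codebase fits in a 200k-token context window.
CONTEXT_LIMIT_TOKENS = 200_000  # maximum context length cited in the article
TOKENS_PER_LINE = 10            # ASSUMPTION: illustrative average, not a measured value


def estimated_tokens(file_count: int, lines_per_file: int) -> int:
    """Estimate the token count of a project made of uniformly sized files."""
    return file_count * lines_per_file * TOKENS_PER_LINE


def fits_in_context(file_count: int, lines_per_file: int) -> bool:
    """True if the whole project would fit in the context window at once."""
    return estimated_tokens(file_count, lines_per_file) <= CONTEXT_LIMIT_TOKENS


for files, lines in [(10, 300), (50, 500), (200, 1000)]:
    tokens = estimated_tokens(files, lines)
    print(f"{files} files x {lines} lines ~ {tokens:,} tokens, "
          f"fits: {fits_in_context(files, lines)}")
```

Under this assumption, even a mid-sized project of 50 files with 500 lines each already exceeds the window, which is why the tools must choose what to read.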

These two constraints, limited context and per-token cost, have prompted AI programming tools like Cursor and Windsurf to implement numerous optimizations with the following objectives:

- Accurately extract task-relevant code to conserve context length, which leaves room for multi-step tasks and gives a better user experience.

- Minimize the reading of “unnecessary” code, both to keep the task focused and to reduce costs.

Under the constraints and goals mentioned above, Cursor and Windsurf have adopted different optimization strategies to enhance their product experiences. However, such “optimizations” often involve trade-offs, yielding only locally optimal solutions and inevitably sacrificing certain aspects of the user experience.

The purpose of this article is to help both of us understand the methods and logic behind these “optimizations.” By grasping the trade-offs involved, we can play to each product's strengths and work around its weaknesses, switching tools and adapting how we use them in different scenarios to get the best result for each task.

Conclusion: Windsurf for Getting Started, Cursor for Optimization

Based on recent experiences and a practical evaluation of Cursor 0.43.6 and Windsurf 1.0.7 on December 15, the following conclusions have been drawn:

1. For Beginners Performing Basic Tasks: Windsurf > Cursor Agent > Cursor Composer (Normal Mode)

In Agent mode, performance for executing basic tasks surpasses that of the standard Cursor Composer mode. This is because Agent mode interprets the task, scans the codebase, locates files, reads code, and performs step-by-step operations to complete the task.

Windsurf’s Agent shows better task understanding and multi-step execution capabilities compared to Cursor’s Agent in Composer mode.

2. Key Limitation of Agent Mode: Incomplete File Reading

This limitation affects complex projects and large code files.

- In Cursor’s Agent mode, the default is to read the first 250 lines of a file. If more is needed, it occasionally auto-extends by another 250 lines. For certain well-defined tasks, Cursor performs searches, with each search returning a maximum of 100 lines of code.

- Windsurf reads 200 lines per file by default and, if necessary, retries up to 3 times, reading up to 600 lines in total.
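The read limits described above can be modeled with a small sketch. The numbers (250 lines per read and up to two reads for Cursor Agent; 200 lines per read and up to three reads for Windsurf) come from the observations in this article, not from official documentation, and the `ReadPolicy` class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReadPolicy:
    """Chunked-read behaviour as observed in this article; not an official spec."""
    name: str
    chunk_lines: int   # lines read per attempt
    max_attempts: int  # attempts before the agent stops reading


# ASSUMPTION: values taken from the article's observations of specific versions.
CURSOR_AGENT = ReadPolicy("Cursor Agent", chunk_lines=250, max_attempts=2)
WINDSURF = ReadPolicy("Windsurf", chunk_lines=200, max_attempts=3)


def visible_lines(policy: ReadPolicy, file_length: int) -> int:
    """Upper bound on how many lines of a file the agent ends up seeing."""
    return min(file_length, policy.chunk_lines * policy.max_attempts)


def is_visible(policy: ReadPolicy, file_length: int, line_number: int) -> bool:
    """True if a 1-based line number falls inside the readable prefix."""
    return 1 <= line_number <= visible_lines(policy, file_length)


for policy in (CURSOR_AGENT, WINDSURF):
    seen = visible_lines(policy, 1955)
    print(f"{policy.name}: at most {seen} of 1955 lines")
```

This is an upper bound: the article notes that Cursor only occasionally extends past its first 250 lines, so the lines actually read may be fewer.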

3. Cursor Outperforms Windsurf for Single File Operations

In Cursor, if you @ a specific file, it will attempt to read the file as completely as possible (tested up to 2000 lines).

In Windsurf, @-mentioning a file merely helps it locate the relevant file; it does not trigger a complete read of that file. This is a key difference in logic between the two tools.

4. When You Understand the Project Structure: Cursor Performs Better with Single File Focus

If you know what you are doing and your task pertains to specific files, using @ to focus on a single file in Cursor yields much better results. Conversely, using @codebase does not ensure that Cursor will include all relevant code in the context. Instead, it appears to use a smaller model to analyze and summarize each file, which leads to incomplete coverage of the necessary code.

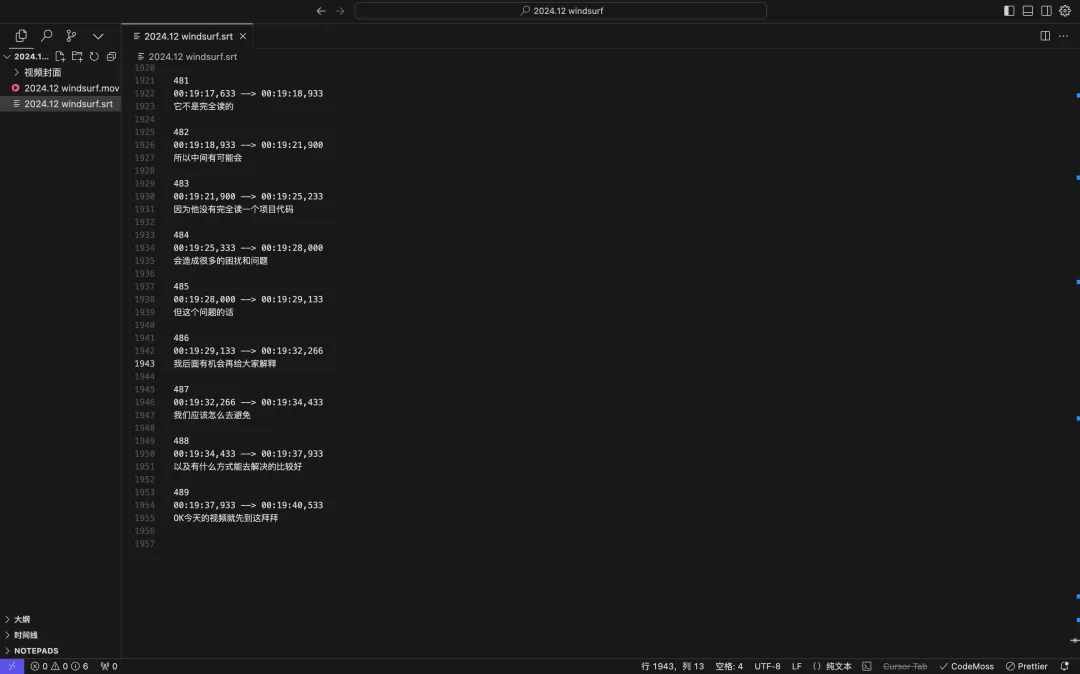

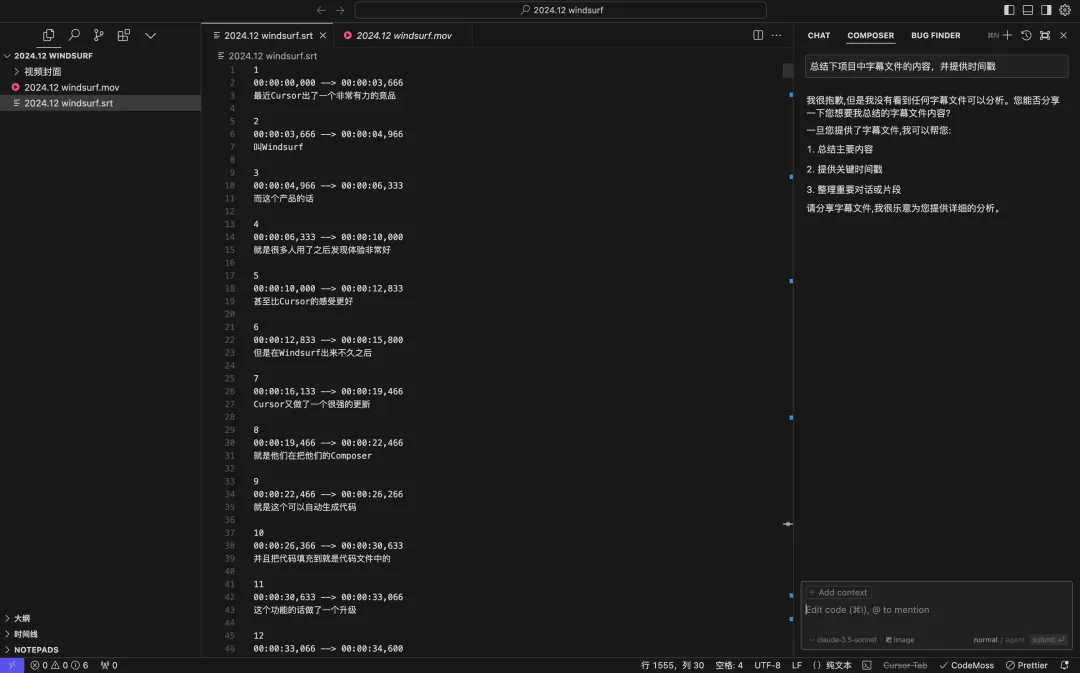

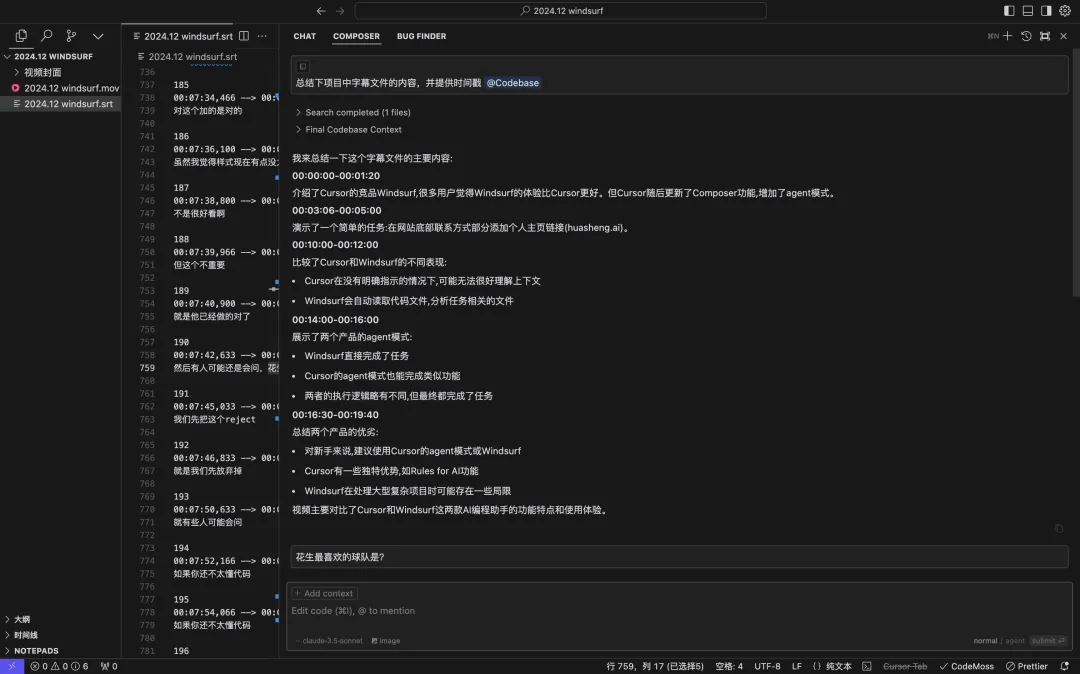

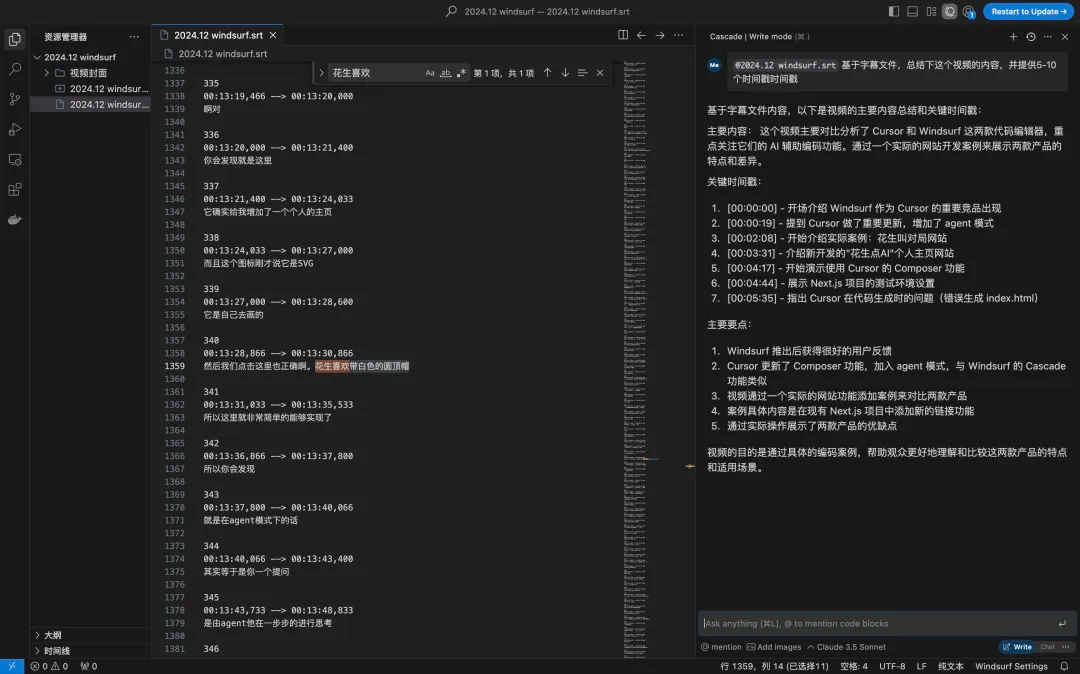

Testing Process

All the conclusions above are based on my extensive daily use of Cursor and Windsurf (500+ hours) and a targeted test. In this test, I used a video subtitle file with 1,955 lines. The subtitle file had timestamps and loosely coupled content, which made it easy to determine whether the AI programming tools truly read the file and how much of it they read, leaving no room for “guesswork.”

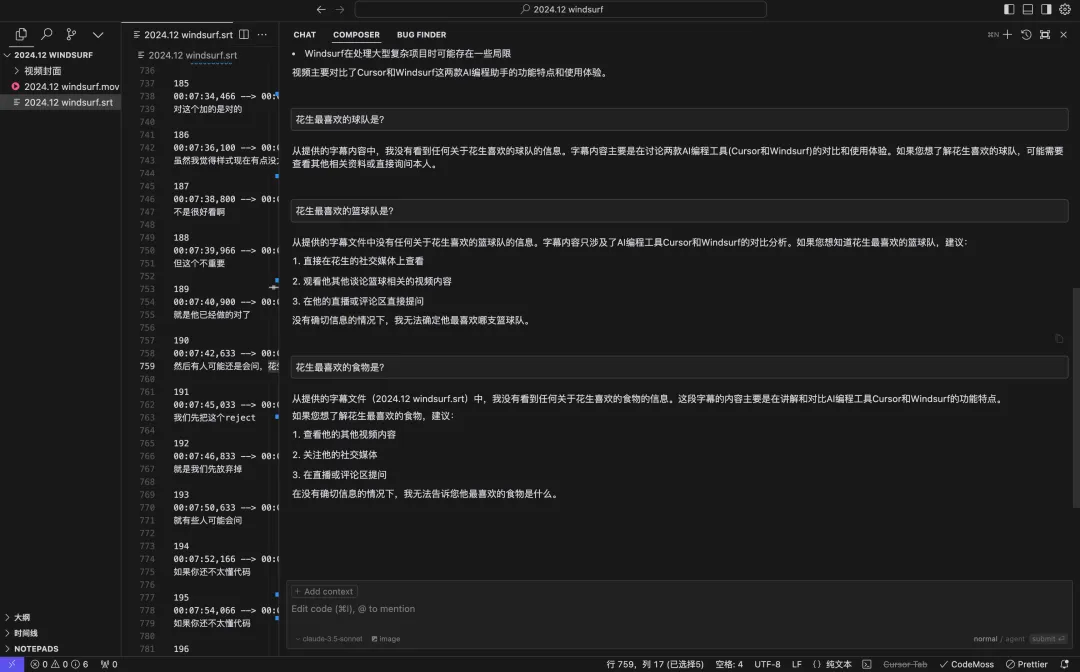

To ensure the tools were genuinely “reading” rather than summarizing via Retrieval-Augmented Generation (RAG), I inserted random, unrelated “trap” sentences every 500 lines. These insertions included:

• “Peanut’s favorite sport is tennis.”

• “Peanut’s favorite basketball team is the Lakers.”

• “Peanut likes to wear a white dome hat.”

• “Peanut’s favorite food is mantis shrimp.”
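A test file like this can be prepared with a few lines of Python. The following is a minimal sketch, not the script used for the article: the function name and the exact insertion positions (one trap after every 500th original line) are assumptions, and the demo uses synthetic lines in place of the real subtitle file.

```python
TRAPS = [
    "Peanut's favorite sport is tennis.",
    "Peanut's favorite basketball team is the Lakers.",
    "Peanut likes to wear a white dome hat.",
    "Peanut's favorite food is mantis shrimp.",
]


def insert_traps(lines: list[str], traps: list[str], interval: int = 500) -> list[str]:
    """Return a copy of `lines` with one trap inserted after every `interval` original lines.

    Stops inserting once the traps run out; the remaining lines are copied unchanged.
    """
    result: list[str] = []
    remaining = iter(traps)
    for index, line in enumerate(lines, start=1):
        result.append(line)
        if index % interval == 0:
            trap = next(remaining, None)
            if trap is not None:
                result.append(trap)
    return result


# Demo with synthetic content standing in for a subtitle file.
sample = [f"subtitle line {i}" for i in range(1, 2001)]
with_traps = insert_traps(sample, TRAPS)
print(len(with_traps))
```

Asking the tool about each trap afterwards shows how far into the file it actually read: a tool that stops at 600 lines can answer at most the first question.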

Test Rounds:

Round 1: Cursor Composer (Normal Mode)

Cursor did not actively locate or read the subtitle file, resulting in a failed task.

Round 2: Cursor Composer (Agent Mode)

In Agent mode, Cursor found and read the subtitle file but only read 250 lines.

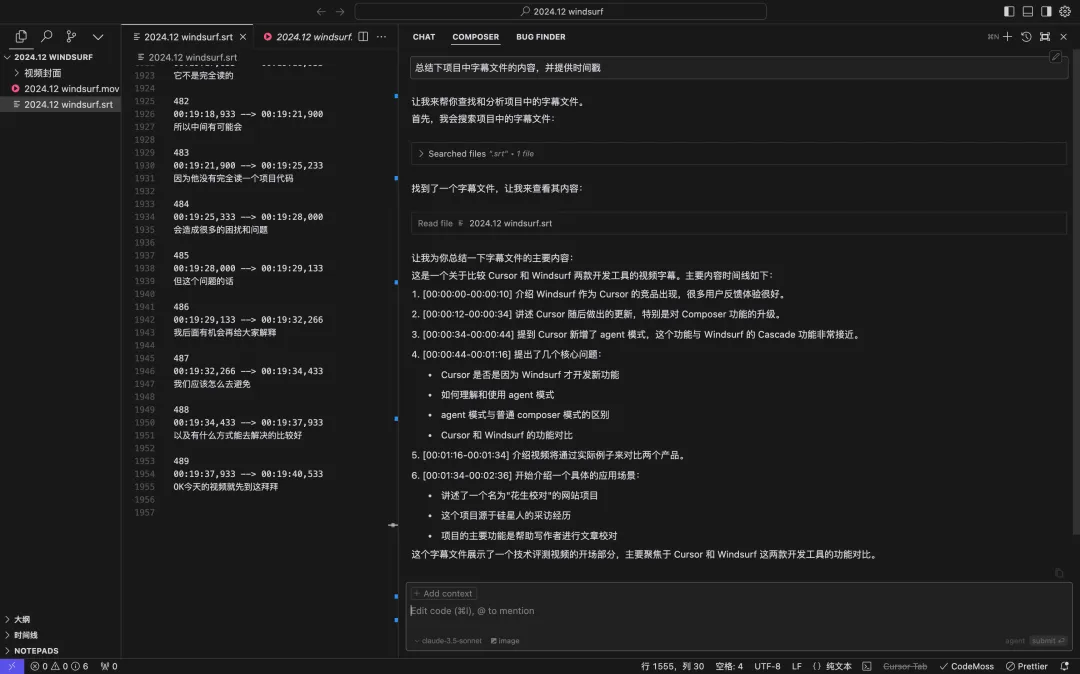

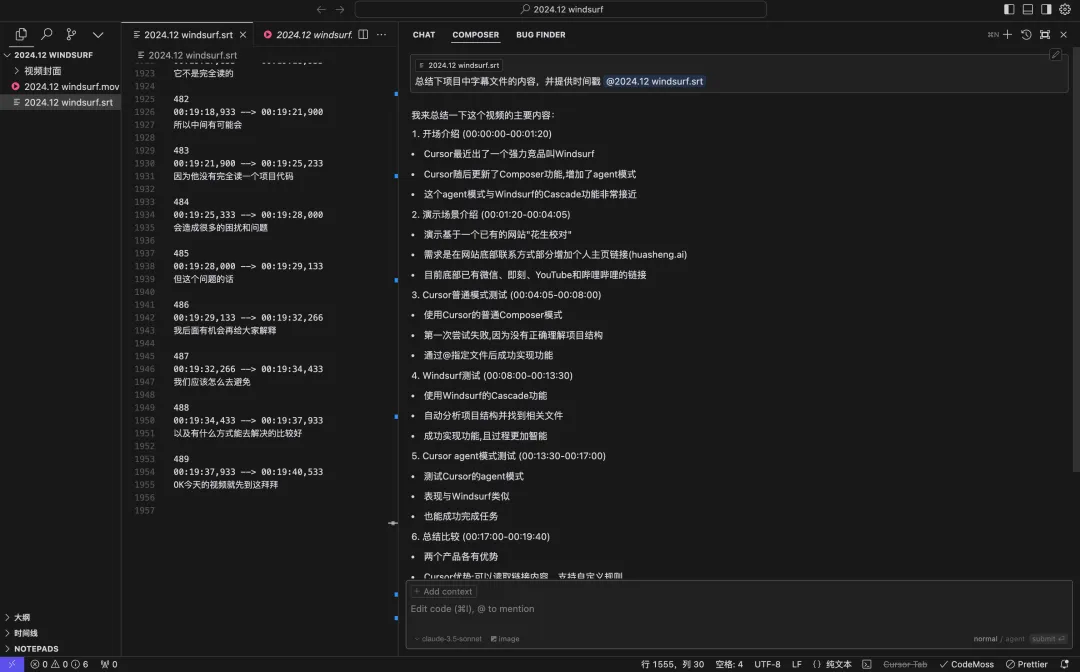

Round 3: Windsurf Cascade (Default Agent Mode)

Windsurf found and read the subtitle file, attempting to read it three times but only reaching 600 lines.

Round 4: Cursor Composer (Single File @ Mode)

When I explicitly @-mentioned the file, Cursor read all 1,955 lines and returned accurate results for the first time. It also passed the random “trap” questions, confirming it genuinely read the content.

Round 5: Cursor Composer (@codebase Mode)

Cursor summarized the video’s content but failed all the trap questions. This suggests that in this mode, Cursor used a smaller model to perform multiple reads and returned only summarized information to the context.

Round 6: Windsurf Cascade (Single File @ Mode)

Explicitly @-mentioning the file in Windsurf still produced a summary covering only 600 lines, confirming that it did not fully read the file.

Recommendations for Using Cursor and Windsurf in Different Scenarios

- Keep each code file under 500 lines. This ensures the file remains within the range that Cursor Agent can read in two attempts.

- Clearly document the function and implementation logic of each code file in the first 100 lines. Use comments to make it easier for the Agent to index and understand the file’s purpose.

- For beginners or simple projects in their early stages, Windsurf is more effective. It excels at straightforward tasks and lowers the barrier for new users.

- For complex projects with files exceeding 600 lines, Cursor is the better choice. If you're familiar with the project, understand your tasks, and know which code files are relevant, using Cursor and explicitly @-mentioning the corresponding files will yield the best results.

- Restart conversations frequently. For example, after completing a new feature or fixing a bug, start a fresh conversation so that an overlong context does not pollute subsequent work on the project.

- Regularly document your project’s state and structure in a dedicated file (e.g., README.md). This allows Cursor and Windsurf to quickly understand your project’s status when restarting conversations, minimizing the risk of bringing excessive or unnecessary context.
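The first recommendation is easy to check automatically. Below is a minimal sketch that lists source files longer than 500 lines; the function names and the default set of file suffixes are assumptions to adapt to your own project.

```python
from pathlib import Path

MAX_LINES = 500  # limit suggested in the recommendations above


def count_lines(path: Path) -> int:
    """Count the lines in a text file, tolerating undecodable bytes."""
    with path.open("r", encoding="utf-8", errors="replace") as handle:
        return sum(1 for _ in handle)


def oversized_files(root: Path,
                    suffixes: tuple[str, ...] = (".py", ".ts", ".js"),
                    max_lines: int = MAX_LINES) -> list[tuple[Path, int]]:
    """Return (path, line_count) pairs for files over max_lines, longest first."""
    results = []
    for path in root.rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            line_count = count_lines(path)
            if line_count > max_lines:
                results.append((path, line_count))
    return sorted(results, key=lambda item: item[1], reverse=True)


def report(root: Path) -> None:
    """Print one line per oversized file under root."""
    for path, line_count in oversized_files(root):
        print(f"{line_count:>6}  {path}")
```

Calling `report(Path("."))` from the project root prints the files worth splitting before handing the project to an Agent.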

Note: This article is translated and republished with authorization from 花叔. Original Chinese article: https://mp.weixin.qq.com/s/Fl-K-tdRuhlT9I-bcLbtdg