JSON (JavaScript Object Notation), a prevailing data exchange format, is widely used across platforms and languages, and Go is no exception. Go's standard library processes JSON with ease, powering interfaces such as the REST API of the Kubernetes API server.

Although Go's library works great, we can still look to the open-source JSON libs on GitHub for better efficiency. Their features, performance, and applicability are what we should take into consideration.

Here comes my "evaluation report."

JSON In Go

There are two steps when manipulating JSON with Go's built-in encoding/json package.

- Define the mapping, that is, map each struct field to its corresponding JSON key (usually with struct tags).

- Serialize and deserialize, converting between JSON strings and structs with the Marshal or Unmarshal functions.

An example is as follows.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
	"time"
)

func main() {
	// Struct tags map each field to its JSON key.
	type Customer struct {
		Name    string    `json:"name"`
		Created time.Time `json:"created"`
	}

	data := []byte(`
{
	"name": "J.B",
	"created": "2018-04-09T23:00:00Z"
}`)

	var c Customer
	if err := json.Unmarshal(data, &c); err != nil {
		fmt.Println(err)
		os.Exit(1)
	}

	fmt.Println(c.Name)
}
```

Straightforward, right? It also handles multi-level nested JSON structures, and you can fall back to a map when the JSON contains dynamic keys.
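To illustrate that last point, here is a minimal sketch (the Order type, its fields, and the sample data are hypothetical): a nested struct decodes like any other field, and a map absorbs keys that are not known in advance.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// Order is a hypothetical type: Customer is a nested object, and Attributes
// holds arbitrary keys such as {"color": "red"} that vary from order to order.
type Order struct {
	ID       string `json:"id"`
	Customer struct {
		Name string `json:"name"`
	} `json:"customer"`
	Attributes map[string]string `json:"attributes"`
}

func parseOrder(data []byte) (Order, error) {
	var o Order
	err := json.Unmarshal(data, &o)
	return o, err
}

func main() {
	data := []byte(`{"id":"42","customer":{"name":"J.B"},"attributes":{"color":"red","size":"M"}}`)
	o, err := parseOrder(data)
	if err != nil {
		fmt.Println(err)
		return
	}
	// Map iteration order is random, so sort the dynamic keys before printing.
	keys := make([]string, 0, len(o.Attributes))
	for k := range o.Attributes {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	fmt.Println(o.Customer.Name, keys) // J.B [color size]
}
```

When the values under dynamic keys also vary in type, `map[string]any` works the same way, at the cost of type assertions later.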

Open-Source Go JSON Lib Status

If Go's library looks so perfect, why are there so many third-party libs on GitHub?

Let's take a step back and look at the JSON toolkits with over 1,000 stars.

| Library | Stars | Users | Created (yyyy-mm-dd) | Last commit (yyyy-mm-dd) | Contributors | Issues |
|---|---|---|---|---|---|---|
| ffjson | 2.8K | 692 | 2015-05-20 | 2019-09-30 | 39 | 135 (80 closed) |
| jsoniter | 10K | 66.4K | 2016-11-30 | 2021-09-11 | 46 | 411 (286 closed) |
| easyjson | 3.4K | 21.4K | 2016-02-28 | 2021-10-10 | 80 | 172 (127 closed) |
| jsonparser | 4.2K | 1.8K | 2016-05-22 | 2021-06-10 | 47 | 112 (73 closed) |
| gojay | 2K | - | 2018-04-25 | 2019-06-11 | 17 | 72 (42 closed) |
| fastjson | 1.4K | - | 2018-05-28 | 2021-01-12 | 5 | 73 (40 closed) |
| simdjson-go | 1.2K | 97 | 2020-01-14 | 2021-06-21 | 7 | 13 (13 closed) |
| go-json | 1.2K | - | 2020-04-29 | 2021-10-16 | 13 | 91 (83 closed) |

The table shows the popularity and activity of the eight most popular Go JSON libs.

As early as 2014, the third-party lib ffjson was released, though only as a complement to the encoding/json package. Then, in 2016, jsoniter and easyjson drew many developers, whose participation contributed a lot to the maturity and popularity of the two libs. simdjson-go and go-json, both first released in 2020, are quickly gaining stars. But jsoniter remains the most popular and is frequently mentioned in articles on Go JSON.

After browsing their READMEs, I found the answer to the question raised at the beginning of this section: they improve performance, speed up serialization, and reduce memory overhead. Those are the general pros; it is up to you to figure out which one fits you best and how to make full use of it. To me, the following aspects matter.

- Performance, on both complex JSON structures and the serialization overhead of dynamic structures.

- Adoption: whether a lib's API is as handy as the encoding/json API.

- Features, which attract users most. For example, simdjson-go and go-json may have something special that keeps them alive while jsoniter still dominates.

Besides, studying and comparing their source code and implementations can also help you make the right choice.

The One With The Best Performance

Performance comparisons are intuitive and highly informative. Here I only test six libs, because ffjson and gojay have not been updated since 2019.

I build on jsoniter's benchmark code, modifying it or adding benchmarks for the other five libs. Let's go through the five rounds one by one.

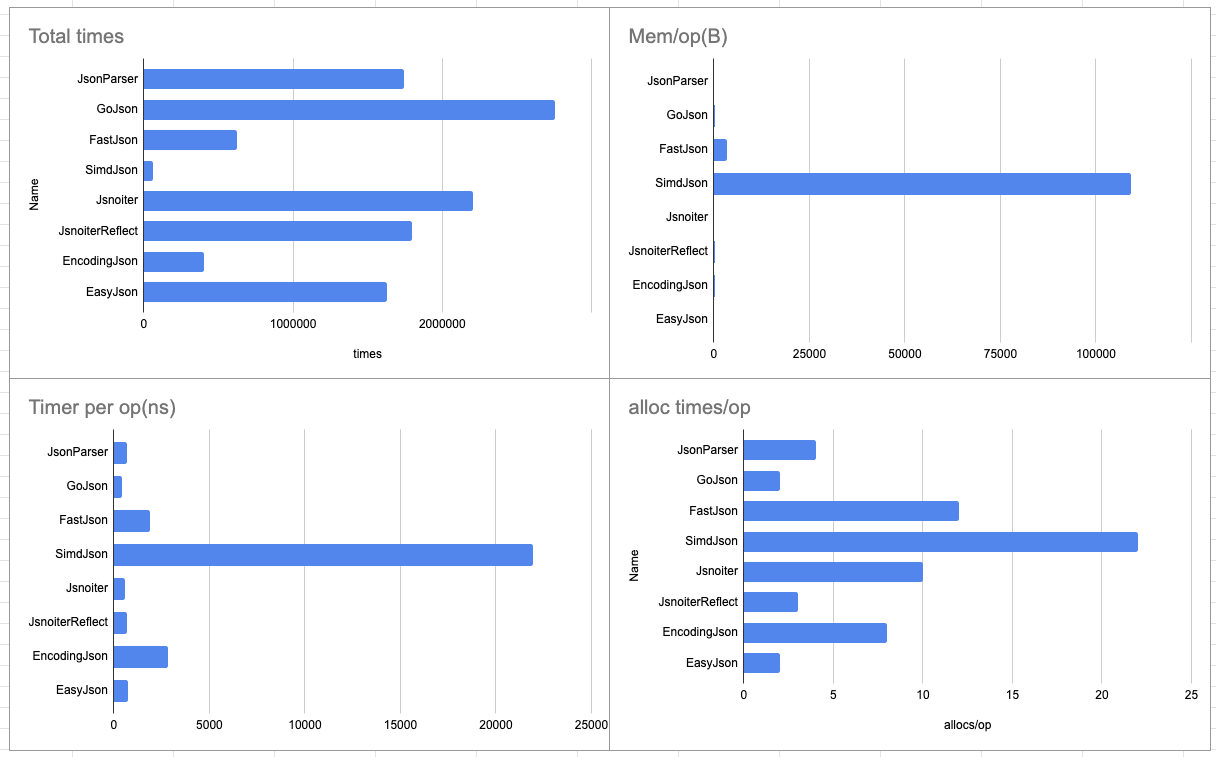

Small-Object Decode Performance

The code is simple, with seven test cases including encoding/json.

Are you shocked at the results? At least, I am.

First, the simdjson-go package, a Go port of the C++ simdjson library, turns out to be the least efficient, beaten even by the native encoding/json. I have limited knowledge of this lib, let alone experience using it. Unluckily, the examples in the documentation I found after an hour of searching cannot be used directly, and only one piece of code is worth referencing.

What's more, its benchmarks all measure MarshalJSON, which confused me: what is the point of accelerating the output after the JSON string has already been parsed? Take this test, for example. Am I missing the key to its door?

```go
func BenchmarkIter_MarshalJSONBuffer(b *testing.B) {
	if !SupportedCPU() {
		b.SkipNow()
	}
	for _, tt := range testCases {
		b.Run(tt.name, func(b *testing.B) {
			ref := loadCompressed(b, tt.name)
			pj, err := Parse(ref, nil)
			if err != nil {
				b.Fatal(err)
			}
			iter := pj.Iter()
			cpy := iter
			output, err := cpy.MarshalJSON()
			if err != nil {
				b.Fatal(err)
			}
			b.SetBytes(int64(len(output)))
			b.ReportAllocs()
			b.ResetTimer()
			for i := 0; i < b.N; i++ {
				cpy := iter
				output, err = cpy.MarshalJSONBuffer(output[:0])
				if err != nil {
					b.Fatal(err)
				}
			}
		})
	}
}
```

Anyway, I roughly understand its core: it parses JSON into a tree-like structure with various node types, similar to how YAML and XML files are parsed. But if you need to find a key by looping over that structure, don't even consider it! I have to admit that I gave up and ruled it out of the subsequent tests.

So who did best in speed? The go-json lib is easy to use, and its functions share the same names as those of encoding/json. Of course, it advances to the next round.

As for easyjson, jsoniter, and jsonparser, they are neck and neck in memory allocation. But easyjson still has the best overall performance, with the smallest memory usage and the fewest allocations. However, easyjson is less user-friendly, because parsing code (benchmark_easyjson.go) must first be generated with the easyjson command-line tool.

Medium-Object Decode Performance

With simdjson-go out, only five third-party libs plus encoding/json remain in this test.

The medium-sized fixture is as follows, and the test code is similar.

```go
var mediumFixture []byte = []byte(`{
  "person": {
    "id": "d50887ca-a6ce-4e59-b89f-14f0b5d03b03",
    "name": {
      "fullName": "Leonid Bugaev",
      "givenName": "Leonid",
      "familyName": "Bugaev"
    },
    "email": "leonsbox@gmail.com",
    "gender": "male",
    "location": "Saint Petersburg, Saint Petersburg, RU",
    "geo": {
      "city": "Saint Petersburg",
      "state": "Saint Petersburg",
      "country": "Russia",
      "lat": 59.9342802,
      "lng": 30.3350986
    },
    "bio": "Senior engineer at Granify.com",
    "site": "http://flickfaver.com",
    "avatar": "https://d1ts43dypk8bqh.cloudfront.net/v1/avatars/d50887ca-a6ce-4e59-b89f-14f0b5d03b03",
    "employment": {
      "name": "www.latera.ru",
      "title": "Software Engineer",
      "domain": "gmail.com"
    },
    "facebook": {
      "handle": "leonid.bugaev"
    },
    "github": {
      "handle": "buger",
      "id": 14009,
      "avatar": "https://avatars.githubusercontent.com/u/14009?v=3",
      "company": "Granify",
      "blog": "http://leonsbox.com",
      "followers": 95,
      "following": 10
    },
    "twitter": {
      "handle": "flickfaver",
      "id": 77004410,
      "bio": null,
      "followers": 2,
      "following": 1,
      "statuses": 5,
      "favorites": 0,
      "location": "",
      "site": "http://flickfaver.com",
      "avatar": null
    },
    "linkedin": {
      "handle": "in/leonidbugaev"
    },
    "googleplus": {
      "handle": null
    },
    "angellist": {
      "handle": "leonid-bugaev",
      "id": 61541,
      "bio": "Senior engineer at Granify.com",
      "blog": "http://buger.github.com",
      "site": "http://buger.github.com",
      "followers": 41,
      "avatar": "https://d1qb2nb5cznatu.cloudfront.net/users/61541-medium_jpg?1405474390"
    },
    "klout": {
      "handle": null,
      "score": null
    },
    "foursquare": {
      "handle": null
    },
    "aboutme": {
      "handle": "leonid.bugaev",
      "bio": null,
      "avatar": null
    },
    "gravatar": {
      "handle": "buger",
      "urls": [],
      "avatar": "http://1.gravatar.com/avatar/f7c8edd577d13b8930d5522f28123510",
      "avatars": [
        {
          "url": "http://1.gravatar.com/avatar/f7c8edd577d13b8930d5522f28123510",
          "type": "thumbnail"
        }
      ]
    },
    "fuzzy": false
  },
  "company": null
}`)

// MediumPayload is the target struct defined in the benchmark suite (not shown).

// encoding/json
func BenchmarkDecodeStdStructMedium(b *testing.B) {
	b.ReportAllocs()
	var data MediumPayload
	for i := 0; i < b.N; i++ {
		json.Unmarshal(mediumFixture, &data)
	}
}

// jsoniter
func BenchmarkDecodeJsoniterStructMedium(b *testing.B) {
	b.ReportAllocs()
	var data MediumPayload
	for i := 0; i < b.N; i++ {
		jsoniter.Unmarshal(mediumFixture, &data)
	}
}

// ...
```

For jsonparser, which I could not get to convert directly into the MediumPayload struct, I only extract two fields. Let's see the test results.
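For comparison, the standard library can also be told to keep only a couple of values: declare a struct with just those fields, and encoding/json discards every other key, although it still scans the whole input. The article does not say which two fields the jsonparser test extracts, so the pair below (fullName and the GitHub follower count) is a hypothetical choice, and the input is a trimmed excerpt of mediumFixture.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// twoFields declares only the values we care about; every other key in the
// document is skipped by encoding/json without being stored.
type twoFields struct {
	Person struct {
		Name struct {
			FullName string `json:"fullName"`
		} `json:"name"`
		Github struct {
			Followers int `json:"followers"`
		} `json:"github"`
	} `json:"person"`
}

func pickTwo(data []byte) (string, int, error) {
	var v twoFields
	if err := json.Unmarshal(data, &v); err != nil {
		return "", 0, err
	}
	return v.Person.Name.FullName, v.Person.Github.Followers, nil
}

func main() {
	data := []byte(`{"person":{"name":{"fullName":"Leonid Bugaev"},"email":"leonsbox@gmail.com","github":{"handle":"buger","followers":95}},"company":null}`)
	name, followers, err := pickTwo(data)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(name, followers) // Leonid Bugaev 95
}
```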

As expected, the standard library encoding/json is the slowest. fastjson, though 1.60 times faster than the standard lib, consumes 30 times the memory and allocates 5 times as many objects, and all its other results are worse than the other libs'. I will not choose fastjson, because the speed gain cannot make up for its poor showing elsewhere. In addition, its API does not convert a JSON string into a struct; instead you read each field with a Get* method, which is unfriendly when the struct's fields are already well defined.

And the winner? jsonparser, streets ahead of its rivals in almost every metric: the fastest speed, the smallest memory cost, and the most GC-friendly behavior!

Large-Object Decode Performance

Now to the arena of large-string parsing. The test object comes from easyjson, and five benchmarks are used. The string is too long to present here, and the code is similar to the above.

Let’s check the results.

jsonparser triumphs again with the fastest speed and the least memory usage, but this is partly because it does not parse the entire struct. Still, it beats jsoniter in the same scenario (picking two fields).

Comparing easyjson with go-json, both of which convert JSON directly into a struct object, easyjson is clearly better: it is 50% faster and allocates only a quarter of the memory that go-json does.

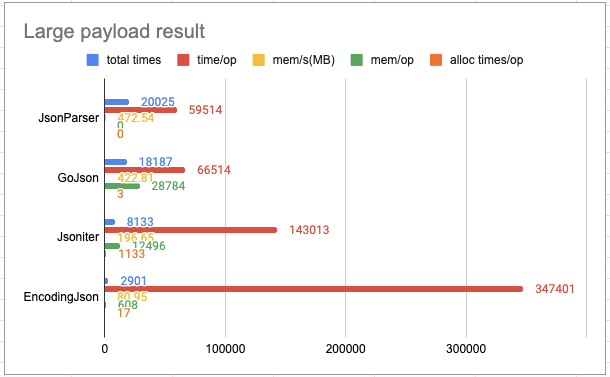

Large Payload Decode Performance

This round tests how the libs perform when parsing a JSON array containing many elements. The test data is an array of dozens of JSON objects like the one below.

```json
{"id":-1,"username":"system","avatar_template":"/user_avatar/discourse.metabase.com/system/{size}/6_1.png"}
```

Only jsonparser, go-json, jsoniter, and encoding/json take part this time. easyjson is left out because I couldn't generate the corresponding code file with the easyjson tool, one of the troubles with adopting easyjson I mentioned earlier.

The results are below.

What's different this time? encoding/json performs exceptionally. Although it is still slow, its memory overhead is small, far better than that of jsoniter and go-json. Moreover, encoding/json fully decodes the object, which is a plus.

But what surprised me most is that jsoniter is slower than go-json and has a higher overhead.

Object Encode Performance

After the four decode tests above, I wanted to see how the libs perform when converting objects to JSON, so I ran the test with the data from the medium decode test. jsonparser is excluded because it supports only decoding, not encoding.

Here are the test results.

By contrast, the libs perform surprisingly close to each other in the encode test. Even encoding/json keeps up with the pack, while go-json is once again first-rate.

Which One To Choose

I believe you have your own ranking in mind after these "five contests." jsoniter and easyjson should have done better, given that almost all the test cases come from them. The following table summarizes the test results and shows how their performance defied my expectations.

In fact, ease of use matters as much as performance when selecting a lib. In this regard, both go-json and jsoniter can directly replace the Unmarshal and Marshal functions of encoding/json, which is convenient. For decode-heavy workloads, jsoniter is the first choice, while for encode-heavy workloads, go-json wins.

As for jsonparser, although it parses fastest, I would only consider it for small objects or for extracting a few fields, because I have to define JSON paths instead of using struct tags, and I don't want to spend time maintaining an extra [][]string of paths. Besides, it is decode-only, so the other libs are better choices when you need both decoding and encoding.

easyjson performs excellently on objects of all sizes, but the extra generated files it requires remain a concern. Sometimes the straw that breaks the camel's back is tiny: some users abandon easyjson unless they have especially high requirements for parsing performance.

Finally, let's not forget encoding/json, which has its own advantages. First, no additional libs are needed; Go itself can do everything, which suits those who do not need optimal performance. Second, as the large JSON array test showed, its modest memory footprint fits cost-conscious cloud workloads and cron jobs that are not time-critical.

Dig Into The Implementations

How does jsoniter speed up parsing? How does go-json differ? And why does jsonparser beat the others at parsing? At this point, nothing could stop me from diving into their implementation details.

The reasons every third-party JSON library is faster than encoding/json are similar:

- Little or no reflection. Most of them use unsafe.Pointer to process data, avoiding slow reflection.

- Heavy use of caching, which reduces the overhead of object creation and destruction. Each lib has its own way of doing this.

jsoniter

After a quick read of the source code, I found several techniques jsoniter uses to improve its performance and memory efficiency.

- It scans the input JSON as a stream and parses the data through its internal Iterator object.

- It caches data to reduce copying inside the Iterator. When parsing an object's field names, it does not allocate new bytes to store them; instead, where possible, it reuses its buffer as a slice. The source code also caches decoders per type:

```go
func (cfg *frozenConfig) DecoderOf(typ reflect2.Type) ValDecoder {
	cacheKey := typ.RType()
	decoder := cfg.getDecoderFromCache(cacheKey)
	if decoder != nil {
		return decoder
	}
	ctx := &ctx{
		frozenConfig: cfg,
		prefix:       "",
		decoders:     map[reflect2.Type]ValDecoder{},
		encoders:     map[reflect2.Type]ValEncoder{},
	}
	ptrType := typ.(*reflect2.UnsafePtrType)
	decoder = decoderOfType(ctx, ptrType.Elem())
	cfg.addDecoderToCache(cacheKey, decoder)
	return decoder
}
```

- It parses each type directly. jsoniter analyzes the whole struct type in advance, determines each field's type, and assigns the matching decoder, which the Iterator then calls directly during traversal. For example, numbers have their own decoder, so no intermediate conversion to a string is needed. From the source code:

```go
decoder := createDecoderOfJsonRawMessage(ctx, typ)
if decoder != nil {
	return decoder
}
decoder = createDecoderOfJsonNumber(ctx, typ)
if decoder != nil {
	return decoder
}
decoder = createDecoderOfMarshaler(ctx, typ)
if decoder != nil {
	return decoder
}
```

- It maps each type to a dedicated encoder and caches it, avoiding frequent creation and destruction.

Finally, let's talk about jsoniter's weaknesses. It clearly consumes more memory and allocates more than the native lib when parsing the large-payload JSON string, because it reads all the JSON into an Iterator and parses each token in a loop. From the source code:

```go
for c = iter.nextToken(); c == ','; c = iter.nextToken() {
	if length >= arrayType.Len() {
		iter.Skip()
		continue
	}
	idx := length
	length += 1
	elemPtr = arrayType.UnsafeGetIndex(ptr, idx)
	decoder.elemDecoder.Decode(elemPtr, iter)
}
```

In comparison, encoding/json only starts parsing once it has recursed down to the smallest parsing unit, which lowers both its speed and its overhead.

```go
func (d *decodeState) array(v reflect.Value) error {
	// Check for unmarshaler.
	u, ut, pv := indirect(v, false)
	if u != nil {
		start := d.readIndex()
		d.skip()
		return u.UnmarshalJSON(d.data[start:d.off])
	}
	// ...
}
```

go-json

go-json's strongest point is that it is 100% compatible with encoding/json, and it is fast thanks to caching! The explanation is on its front page:

> Buffer reuse
>
> There is a technique to reduce the number of times a new buffer must be allocated by reusing the buffer used for the previous encoding by using sync.Pool.
>
> Finally, you allocate a buffer that is as long as the resulting buffer and copy the contents into it, you only need to allocate the buffer once in theory.

Just like jsoniter, it also categorizes different types of decoders and caches them.

```go
func CompileToGetDecoder(typ *runtime.Type) (Decoder, error) {
	typeptr := uintptr(unsafe.Pointer(typ))
	if typeptr > typeAddr.MaxTypeAddr {
		return compileToGetDecoderSlowPath(typeptr, typ)
	}

	index := (typeptr - typeAddr.BaseTypeAddr) >> typeAddr.AddrShift
	if dec := cachedDecoder[index]; dec != nil {
		return dec, nil
	}

	dec, err := compileHead(typ, map[uintptr]Decoder{})
	if err != nil {
		return nil, err
	}
	cachedDecoder[index] = dec
	return dec, nil
}
```

But go-json decodes more slowly than jsoniter, possibly because:

- go-json performs many operations like copy(src, data) instead of jsoniter's streaming input analysis, which reduces speed and increases memory overhead.

- Many slice copies are used in the code.

```go
if u != nil {
	start := d.readIndex()
	d.skip()
	return u.UnmarshalJSON(d.data[start:d.off])
}
```

On the other hand, go-json has a more reasonable, clearer, and more readable code structure, which I prefer.

Many tiny details also improve efficiency, such as the frequent use of bit operations; the CompileToGetDecoder function above contains one, and there are many other examples in the codebase.

```go
index := (typeptr - typeAddr.BaseTypeAddr) >> typeAddr.AddrShift
```

jsonparser

Going through all the performance tests again, you will see that jsonparser is outstanding in almost every test, except that it cannot encode at all. Out of curiosity, I looked for the reason in its official documentation.

> Operates with JSON payload on byte level, providing you pointers to the original data structure: no memory allocation.
>
> No automatic type conversions, by default everything is a []byte, but it provides you value type, so you can convert by yourself (there is few helpers included).
>
> Does not parse full record, only keys you specified.

Basically, it is the third point that makes it so fast; the first is also achieved by jsoniter, and the second is what many libs do. Moreover, in the test code, libs like jsoniter and go-json convert the entire JSON string into a struct object, whereas jsonparser only takes 2–3 field values.

Now let's look at its source code, which is more direct and simple. Take the EachKey method as an illustration; it works in two steps:

- EachKey finds the start index of the key. Distinguishing an array ([) from an ordinary value (:) is the most complex part.

```go
if data[i] == ':' {
	match := -1
	key := data[keyBegin:keyEnd]

	// for unescape: if there are no escape sequences, this is cheap; if there are, it is a
	// bit more expensive, but causes no allocations unless len(key) > unescapeStackBufSize
	var keyUnesc []byte
	if !keyEscaped {
		keyUnesc = key
	} else {
		var stackbuf [unescapeStackBufSize]byte
		if ku, err := Unescape(key, stackbuf[:]); err != nil {
			return -1
		} else {
			keyUnesc = ku
		}
	}

	if maxPath >= level {
		if level < 1 {
			cb(-1, nil, Unknown, MalformedJsonError)
			return -1
		}

		pathsBuf[level-1] = bytesToString(&keyUnesc)
		for pi, p := range paths {
			if len(p) != level || pathFlags[pi] || !equalStr(&keyUnesc, p[level-1]) || !sameTree(p, pathsBuf[:level]) {
				continue
			}

			match = pi

			pathsMatched++
			pathFlags[pi] = true

			v, dt, _, e := Get(data[i+1:])
			cb(pi, v, dt, e)

			if pathsMatched == len(paths) {
				break
			}
		}
		if pathsMatched == len(paths) {
			return i // return the index here!
		}
	}
	// ...
}
```

- Once the start index is found and returned, the caller decides what to convert the value to (string, int, or something else), because this part of the logic cannot be automated.

Therefore, jsonparser is fairly limited and suits only certain situations. For example, reading fixed keys from JSON parameters, such as when validating API inputs, is a perfect use case.
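jsonparser's idea of reading only the keys you ask for can be approximated with the standard library's token API, which is handy for checking a few fixed fields. A minimal sketch, not jsonparser's algorithm: getPath (a hypothetical helper) walks one object level at a time, stops scanning a level as soon as the key is found, and returns the raw bytes of the value. Sibling values it passes over are still scanned and validated, so it does not match jsonparser's zero-allocation design.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
)

// getPath returns the raw JSON value found by following path through
// nested objects, e.g. getPath(data, "person", "github", "followers").
func getPath(data []byte, path ...string) (json.RawMessage, error) {
	cur := json.RawMessage(data)
	for _, key := range path {
		dec := json.NewDecoder(bytes.NewReader(cur))
		if tok, err := dec.Token(); err != nil {
			return nil, err
		} else if tok != json.Delim('{') {
			return nil, fmt.Errorf("looking up %q: value is not an object", key)
		}
		found := false
		for dec.More() {
			nameTok, err := dec.Token() // object keys come back as strings
			if err != nil {
				return nil, err
			}
			var raw json.RawMessage
			if err := dec.Decode(&raw); err != nil {
				return nil, err
			}
			if nameTok == key {
				cur, found = raw, true
				break // skip the remaining keys at this level
			}
		}
		if !found {
			return nil, fmt.Errorf("key %q not found", key)
		}
	}
	return cur, nil
}

func main() {
	data := []byte(`{"person":{"name":{"fullName":"Leonid Bugaev"},"github":{"followers":95}},"company":null}`)
	raw, err := getPath(data, "person", "github", "followers")
	fmt.Println(string(raw), err) // 95 <nil>
}
```

Like jsonparser, the caller then decides how to convert the returned bytes, for example with `json.Unmarshal(raw, &n)`.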

Summary

After studying the most popular JSON frameworks, I have an overall picture of their principles, pros, and cons, and have formed my evaluation report. If I had to make one suggestion to general users, it would be go-json: its performance surpasses encoding/json, and it requires no additional learning, in keeping with Go's philosophy of simplicity first!

P.S. If you are interested, you can find the test code here.

Note: This post is republished on our site with the original author's authorization. The original author is Stefanie Lai, currently a Spotify engineer living in Stockholm; the original post is published here.